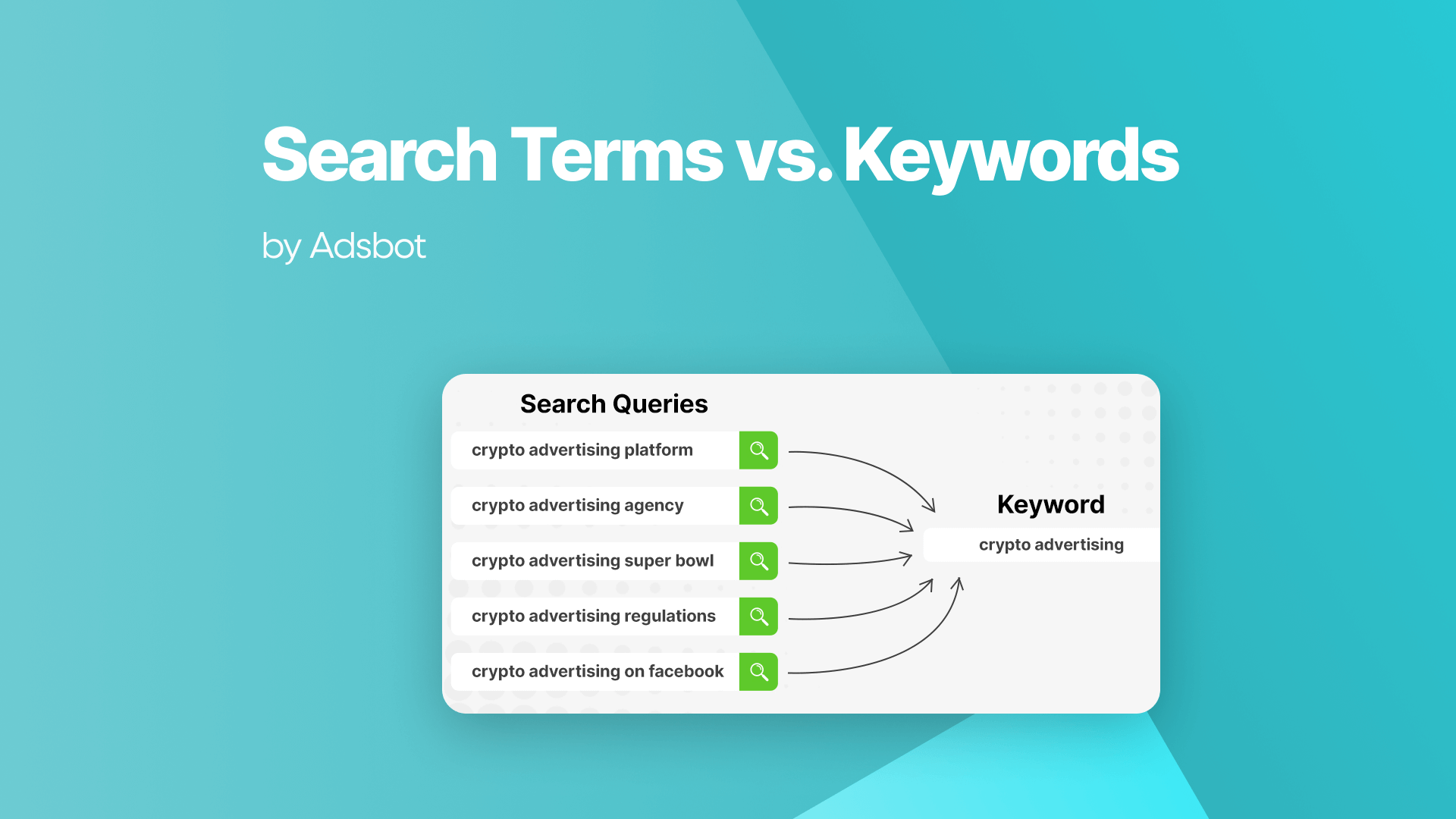

Keywords are the strategic inputs advertisers configure, while search terms are the verbatim queries users execute. In the modern Predictive Era, the relationship between them has decoupled; keywords no longer act as rigid syntactic constraints but as thematic guides for AI. While foundational knowledge is available in The Google Ads Keyword Guide, advanced strategies now require understanding that algorithms prioritize semantic intent over literal spelling.

This shift means a single keyword can trigger ads for thousands of linguistically distinct but contextually relevant search terms. Furthermore, visibility into these terms is increasingly restricted by privacy thresholds, which hide queries failing to meet volume requirements, often masking significant spend. Consequently, strategies have moved toward providing Search Themes and broad signals to guide automation, rather than micromanaging granular query lists.

What is the actual difference between search terms and keywords?

Keywords are the intent signals advertisers provide to the platform, acting as a thematic guide for bidding. Search terms are the actual verbatim queries users type or speak into a search engine. The critical difference lies in the matching mechanism, where modern platforms no longer look for literal syntactic matches but use AI to bridge the gap between a keyword theme and a search term’s predicted intent.

It is a common misconception that keywords are strict commands. In reality, they are merely signals. When you target a keyword, you are asking Google to find users demonstrating intent related to that topic. The search term is the real-world manifestation of that intent. Google has stated that 15% of daily searches are entirely new. This volume of novelty means legacy exact match lists cannot possibly capture all relevant traffic. The system must rely on semantic matching to interpret the meaning behind the query rather than just looking for matching letters.

In our analysis of recent accounts, we frequently see exact match keywords triggering ads for loosely related concepts. For instance, a client bidding on “luxury sedan” recently appeared for a competitor brand name. This overlap makes tools like a Duplicate Keyword Remover essential for maintaining a clean account structure and preventing internal competition. You are no longer matching words but matching the predicted intent behind them.
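The kind of overlap check described above can be sketched in a few lines. This is a minimal illustration, not the logic of any particular Duplicate Keyword Remover tool: the function names and the `(ad_group, keyword_text)` input shape are assumptions, and it deliberately treats the same text in different match types as a duplicate.

```python
from collections import defaultdict


def normalize(keyword: str) -> str:
    """Lowercase, drop surrounding match-type punctuation, collapse whitespace."""
    cleaned = keyword.lower().strip().strip('[]"')
    return " ".join(cleaned.split())


def find_duplicates(keywords):
    """Find keywords that appear in more than one ad group.

    `keywords` is an iterable of (ad_group, keyword_text) pairs
    (a hypothetical export format). Returns a dict mapping each
    normalized keyword to the sorted list of ad groups containing it.
    """
    groups = defaultdict(set)
    for ad_group, text in keywords:
        groups[normalize(text)].add(ad_group)
    return {kw: sorted(ags) for kw, ags in groups.items() if len(ags) > 1}


rows = [
    ("Sedans", "[Luxury Sedan]"),
    ("Premium", '"luxury  sedan"'),
    ("SUVs", "luxury suv"),
]
print(find_duplicates(rows))
```

Whether cross-match-type duplicates should be merged or kept is a judgment call for each account; the sketch only surfaces them.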

How has the relationship between keywords and intent changed?

The industry has moved from a Literal Era to a Predictive Era. Previously, the relationship was linear, where a query led to a keyword that triggered an ad. Today, the system prioritizes predicted outcomes. The engine evaluates thousands of signals, including user journey history and context, to match a user to an ad, often treating the keyword merely as a suggestion rather than a command. Knowing How To Optimize Keywords In Google Ads in this environment means prioritizing these user signals over strict syntax.

This shift fundamentally changes how we view account structure. Sarah Stemen, a noted PPC Strategist, correctly identifies that Google is no longer bidding on the query but on the user profile. The algorithm asks whether this specific user, in this specific moment, is likely to convert. If the answer is yes, it may serve an ad even if the keyword match seems loose. To make these predictions effectively, Smart Bidding generally requires 30 to 50 conversions per month. Without this data density, the predictive model fails to distinguish between high-intent and low-intent users.

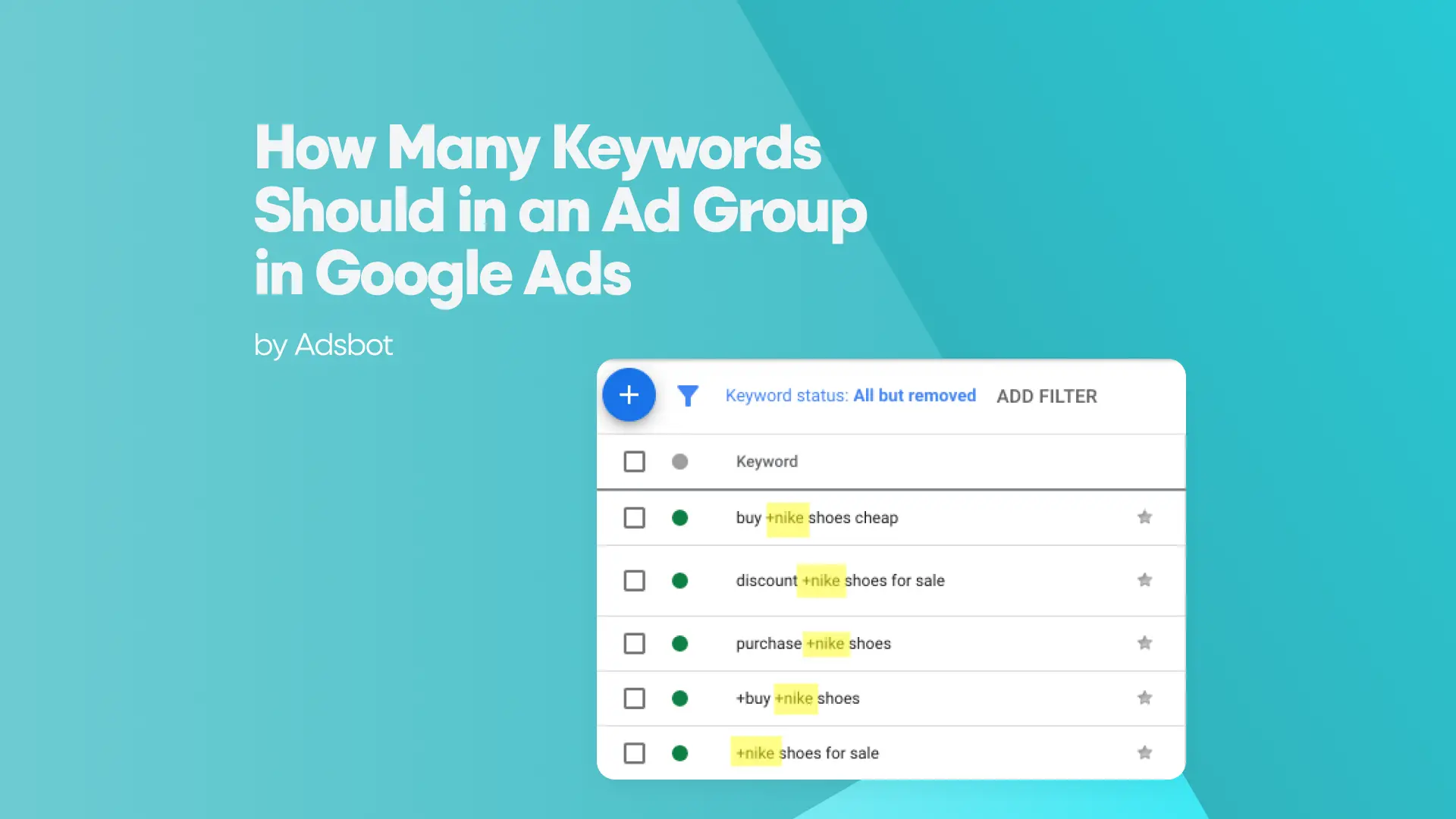

This reality renders the old-school Single Keyword Ad Group strategy obsolete. Hyper-segmenting campaigns into granular silos effectively starves the algorithm of the data it needs. We found that consolidating ad groups to aggregate data often improves performance, because it gives the system enough volume to optimize effectively. Consequently, the question of How Many Keywords Should Be In An Ad Group In Google Ads has shifted from “as many as possible” to “enough to fuel the AI.”
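To make the data-density argument concrete, here is a rough sketch that flags ad groups falling below the lower bound of the 30 to 50 monthly conversions mentioned earlier and shows what they would contribute if pooled. The ad group names and conversion counts are invented for illustration, and the threshold is a rule of thumb rather than a platform-enforced limit.

```python
# Lower bound of the 30-50 conversions/month guideline cited in the text.
MIN_MONTHLY_CONVERSIONS = 30


def consolidation_candidates(monthly_conversions, minimum=MIN_MONTHLY_CONVERSIONS):
    """Return the names of ad groups below the conversion threshold, sorted."""
    return sorted(
        name for name, conversions in monthly_conversions.items()
        if conversions < minimum
    )


def pooled_conversions(monthly_conversions, names):
    """Total monthly conversions the named ad groups would have if merged."""
    return sum(monthly_conversions[name] for name in names)


# Hypothetical account data: three thin single-keyword ad groups and one healthy one.
account = {
    "SKAG - luxury sedan": 6,
    "SKAG - premium sedan": 11,
    "SKAG - executive sedan": 15,
    "Sedans - generic": 48,
}

thin = consolidation_candidates(account)
print(thin, pooled_conversions(account, thin))
```

In this made-up example, no single thin ad group reaches the threshold, but together they hold 32 conversions, which is the effect consolidation is meant to achieve.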

Why are so many search terms hidden in reports?

Search term visibility is restricted by privacy thresholds. Platforms only report search terms that meet a significant volume threshold across all searches to protect user anonymity. This means advertisers often see a large percentage of spend attributed to “Other” or uncategorized terms, even if those terms generated clicks or conversions.

Advertisers frequently face the frustration of forced inefficiency, where significant budget vanishes into the “Other” search term bucket. In our audits of over $20 million in ad spend, we found that up to $0.85 of every dollar spent could be attributed to hidden queries. This lack of transparency is not a performance glitch but a deliberate feature. Ginny Marvin, the Google Ads Liaison, has confirmed that these thresholds are strictly privacy-driven to prevent the identification of individual users based on unique long-tail queries.
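The hidden share is simple arithmetic: subtract the cost of the search terms you can see from total campaign cost and divide by the total. The sketch below assumes you have exported both figures yourself; the function name and inputs are illustrative, not part of any Google Ads report schema.

```python
def hidden_spend_share(total_cost, visible_term_costs):
    """Fraction of spend not attributable to any reported search term.

    total_cost: total campaign cost for the period.
    visible_term_costs: iterable of costs for each search term that
    appears in the search terms report for the same period.
    """
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    visible = sum(visible_term_costs)
    # Clamp at zero in case of rounding noise in the exported figures.
    hidden = max(total_cost - visible, 0.0)
    return hidden / total_cost


# Hypothetical figures: $1,000 total, $150 of which maps to visible terms.
share = hidden_spend_share(1000.0, [100.0, 50.0])
print(f"{share:.0%} of spend went to hidden queries")
```

Tracking this ratio over time is more useful than a single reading, since it shows whether visibility is improving or eroding.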

Since granular data is no longer guaranteed, strategies must shift. We rely on Search Term Categories within Responsive Search Ads (RSAs) to regain visibility. These categories group the hidden terms into intent-based themes, offering a proxy for the missing data. While you cannot see the exact syntax, you can see whether you are paying for competitor brand intent or for bargain-hunting intent such as “cheap” or “free”. This thematic data is sufficient to make negative keyword decisions at the macro level rather than the micro level.

How do Broad Match and Smart Bidding work together?

Broad Match paired with Smart Bidding is now the industry standard for scalability. Broad Match is the only match type that utilizes all available signals, including landing page content, user location, and previous search history, to find relevant queries. Smart Bidding then acts as a filter, bidding only on the broad match queries that are predicted to meet your ROI targets.

We call this combination the Power Pair of modern search. Data indicates that advertisers switching from Target CPA to Target ROAS (value-based bidding) while using this combination see an average 14% increase in conversion value. Furthermore, 62% of advertisers using Smart Bidding have adopted Broad Match as their primary match type. The mechanism behind this success is Query-Level Learning. Unlike exact match, where data is siloed, broad match allows learnings from one successful query to instantly apply to other semantically related queries across the account.

However, executing this strategy requires strict guardrails. Before worrying about How To Add Keywords To Google Ads for expansion, you must ensure your conversion tracking is impeccable. If the system does not receive high-quality data, such as offline conversions or enhanced conversions, it will optimize for low-quality volume. You must train the Smart Bidding model on what a qualified lead looks like. Without this feedback loop, Broad Match will rapidly spend budget on irrelevant intent simply because the signal strength looked promising to the AI.

What are Search Themes, and how do they replace keywords?

In automated campaigns like Performance Max, Search Themes have replaced traditional keywords. These are optional inputs that let advertisers provide the AI with up to 25 words or phrases representing how customers search. Unlike keywords, which function as rigid targeting mechanisms, Search Themes act as signals to jumpstart the learning phase of the AI or guide it toward new intent categories.

Search Themes fundamentally differ from search keywords regarding auction priority. They share Priority #2 in the auction hierarchy, sitting below Exact Match Search keywords but alongside Phrase and Broad match types. This distinction is vital. If you have an exact match keyword identical to a Search Theme, the keyword takes precedence. However, if no exact match exists, the Search Theme competes on equal footing with broad match logic to find the best user.
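The precedence rule described above can be modeled as a two-tier lookup. This is a deliberately simplified sketch: the `Candidate` type and tier numbers are assumptions made for illustration, and it ignores everything else that influences a real auction, such as Ad Rank and bids.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    source: str      # "keyword" or "search_theme"
    match_type: str  # "exact", "phrase", "broad", or "theme"
    text: str


def priority(candidate: Candidate, query: str) -> int:
    """Tier 1: exact match keyword identical to the query. Tier 2: everything else."""
    identical = candidate.text.lower().strip() == query.lower().strip()
    if candidate.source == "keyword" and candidate.match_type == "exact" and identical:
        return 1
    return 2


def top_tier(candidates, query):
    """Return the candidates sharing the best (lowest) tier for this query."""
    if not candidates:
        return []
    best = min(priority(c, query) for c in candidates)
    return [c for c in candidates if priority(c, query) == best]


exact = Candidate("keyword", "exact", "luxury sedan")
theme = Candidate("search_theme", "theme", "luxury sedan")
broad = Candidate("keyword", "broad", "premium cars")

print(top_tier([theme, exact, broad], "luxury sedan"))  # only the exact keyword
print(top_tier([theme, broad], "luxury sedan"))         # theme and broad tie
```

When the exact match keyword is absent, the theme and the broad keyword land in the same tier, which mirrors the "equal footing" described in the text.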

For new campaigns with zero conversion history, these themes are essential for avoiding the cold start problem. Without historical data, the AI lacks direction on who to target. Inputting high-intent Search Themes, such as specific competitor names or problem-solution phrases, accelerates the exit from the learning phase. We found that providing these specific inputs reduces the time it takes for the algorithm to stabilize performance by giving it an initial map of high-value intent.

How does AI Max for Search utilize search terms?

AI Max for Search is a suite of features that leverages broad match technology and keywordless targeting to capture intent. It utilizes Search Term Matching to expand reach beyond existing keyword lists and Asset Optimization to dynamically tailor ad copy and landing pages via Final URL Expansion to the specific user query, functioning similarly to Dynamic Search Ads but with generative AI capabilities.

The engine uses Final URL Expansion (FUE) to scan your website and select the most relevant landing page for a query, even if you haven’t explicitly built a keyword for it. Campaigns utilizing AI Max generally see an average 18% increase in unique search query categories with conversions. This allows advertisers to capture long-tail traffic that traditional keyword lists inevitably miss due to the sheer volume of daily novel searches.

However, relying on FUE requires strict oversight. In our audits, we often see unmonitored AI traffic landing on non-converting pages like “Blog” posts or “Careers” sections simply because the text matched the query. To prevent this waste, you must rigorously apply URL Exclusions. By excluding informational subfolders, you force the AI to direct traffic only to commercial pages, ensuring that the expanded reach translates into revenue rather than empty clicks.
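Before applying exclusions in the platform, it can help to audit a landing page report offline. The sketch below filters a list of URLs by path prefix; the `/blog` and `/careers` prefixes and the example domain are assumptions standing in for whatever informational subfolders your own site uses.

```python
from urllib.parse import urlparse

# Hypothetical informational subfolders; replace with your site's own paths.
EXCLUDED_PREFIXES = ("/blog", "/careers")


def is_excluded(url, prefixes=EXCLUDED_PREFIXES):
    """True if the URL's path is an excluded folder or sits inside one."""
    path = urlparse(url).path.lower().rstrip("/")
    return any(path == p or path.startswith(p + "/") for p in prefixes)


def commercial_urls(urls, prefixes=EXCLUDED_PREFIXES):
    """Keep only URLs outside the excluded folders, preserving order."""
    return [u for u in urls if not is_excluded(u, prefixes)]


landing_pages = [
    "https://example.com/blog/what-is-a-sedan",
    "https://example.com/careers/",
    "https://example.com/sedans/luxury",
    "https://example.com/blogger-special",
]
print(commercial_urls(landing_pages))
```

Matching on a whole path segment rather than a raw string prefix keeps a page like `/blogger-special` from being excluded by accident.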

How should I manage negative keywords in a predictive ecosystem?

Negative keywords are now the primary lever for steering AI. Mastering Negative Keywords in Google Ads is crucial, but the work itself has changed: manually blocking every misspelling is no longer necessary, because the system accounts for semantic intent automatically. Strategies must shift from micro-management, where you block every variant, to macro-management, where you block entire themes or concepts, so that you do not over-constrict the AI’s ability to find relevant volume.

In the past, managing negatives meant adding thousands of close variants. Today, Google automatically handles misspellings for negative keywords, rendering those massive spreadsheet lists useless. We now prioritize Account-Level Negative Keywords to establish global control across both Search and Performance Max campaigns. This feature ensures that if you sell high-end furniture, you can globally block terms like “cheap” or “used” without applying lists to fifty individual campaigns.

- Brand Exclusions: For Performance Max specifically, these are non-negotiable for growth-focused accounts. Without this list, PMax often cannibalizes your existing branded traffic because it seeks the path of least resistance to a conversion. By applying a Brand Exclusion list, you force the system to look for new customers rather than harvesting users who were already searching for you. We treat this as traffic sculpting.

Advertisers can apply up to 10,000 negative keywords to Performance Max campaigns. This capacity is sufficient for a macro-level strategy. If you find yourself hitting this limit, you are likely trying to fight the algorithm rather than guide it. The goal is to provide the guardrails, not to build the road.
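A macro-level negative list is short enough to manage in code. The sketch below deduplicates a raw list, reports headroom against the 10,000 limit mentioned above, and previews which search terms a theme-level negative would block. The matching rule is a simplified word-containment check assumed for illustration; it is not a reimplementation of Google's negative match types.

```python
PMAX_NEGATIVE_LIMIT = 10_000  # per-campaign limit cited in the text


def build_negative_list(raw_terms):
    """Normalize case and whitespace, drop blanks and duplicates, keep order."""
    seen = set()
    result = []
    for term in raw_terms:
        normalized = " ".join(term.lower().split())
        if normalized and normalized not in seen:
            seen.add(normalized)
            result.append(normalized)
    return result


def remaining_capacity(negatives, limit=PMAX_NEGATIVE_LIMIT):
    """How many more negatives fit before hitting the limit."""
    return limit - len(negatives)


def is_blocked(search_term, negatives):
    """Simplified check: blocked if every word of some negative is in the term."""
    words = set(search_term.lower().split())
    return any(set(negative.split()) <= words for negative in negatives)


negatives = build_negative_list(["Cheap", "cheap ", "used", "  "])
print(negatives, remaining_capacity(negatives))
print(is_blocked("cheap leather sofa", negatives))
print(is_blocked("designer leather sofa", negatives))
```

If the remaining capacity trends toward zero, that is a prompt to replace long variant lists with a handful of theme-level terms.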

What is the future of keyword targeting?

We are moving toward a keywordless future where signals replace syntax. Traditional match types will likely consolidate further, eventually leaving only Exact for control and AI Broad for growth. The focus will shift entirely to Generative Engine Optimization (GEO) and Entity Authority, where the AI matches a user’s problem to a brand’s verified solution regardless of the specific words used to search.

The metrics of success are changing. We are transitioning from Share of Voice to Share of Model (SoM). This new metric measures how frequently a Large Language Model recommends your brand as the answer to a user query. In this environment, your keyword list matters less than your entity density and the trust signals you send to the engine. The AI does not care if you bid on the keyword if it does not trust your solution.

In this new reality, keywords evolve into contextual guides or clusters of intent. The winner is no longer the best keyword researcher but the strategist who feeds the AI the best data. We advise clients to stop obsessing over the search term report and start obsessing over the conversion data they send back to the platform. If you feed the system high-quality, verified conversion data, it will find the right users. If you starve it of data, no amount of keyword research will save the account.
