If you run a website, you have probably heard about the robots.txt file. This file matters because it tells search engine crawlers which pages they are allowed to crawl on your website. However, editing and maintaining the robots.txt file correctly can be confusing. This is where a robots.txt checker tool comes in handy! In this blog post, we will discuss what a robots.txt checker is, why it is essential for your website, and how you can use it effectively. So let’s get started!

1. The Importance of Robots.txt for Your Website’s SEO

The robots.txt file is a vital component of a website, especially for SEO. By controlling which pages search engine crawlers can access, website owners can keep crawlers away from pages that do not belong in search and prioritize the crawling of significant pages. If a website does not have a robots.txt file, Google can typically still discover and index all essential pages. For SEO strategists and developers, the Adsbot robots.txt tester can be a helpful tool for managing and understanding how web spiders crawl a website. Overall, the robots.txt file is one aspect of SEO that should not be overlooked. Adsbot helps you check your robots.txt file so that you avoid wasting time and money, and you can spot early what needs to be done for well-optimized ads.

2. Understanding How Google Web Crawlers Use Robots.txt

Google web crawlers use robots.txt to determine which pages of your website they can crawl. By specifying which pages to block or allow in your robots.txt file, you can keep your website’s visibility on search engines optimized. It is important to understand that reputable search engine bots generally follow the rules set in your robots.txt file, but the file is a request rather than an enforcement mechanism, so there is no guarantee that every crawler will abide by it. Additionally, if you accidentally block important pages, it may negatively impact your website’s SEO. It is therefore crucial to test and validate your robots.txt file regularly. With the Adsbot robots.txt checker tool, you can check the robots.txt file on your site to manage and understand how web spiders crawl it. By following best practices with the Adsbot robots.txt testing tool and avoiding common mistakes in writing your robots.txt file, you can effectively manage and monitor your website’s accessibility to web crawlers.
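To make the crawler's decision concrete, here is a minimal sketch using Python's standard-library `urllib.robotparser`. The rules, the `example.com` URLs, and the `is_allowed` helper are assumptions made up for illustration. Python's parser is a simplified model that uses plain prefix matching, so real crawlers such as Googlebot may treat edge cases differently.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for an example site; not taken from any real website.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
"""


def is_allowed(robots_txt, user_agent, url):
    """Return True if the given user agent may crawl the URL under these rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse the rules from text, no network call
    return parser.can_fetch(user_agent, url)


print(is_allowed(ROBOTS_TXT, "Googlebot", "https://example.com/blog/post"))    # True
print(is_allowed(ROBOTS_TXT, "Googlebot", "https://example.com/admin/login"))  # False
```

The blog URL matches no `Disallow` rule and is therefore crawlable, while the admin URL starts with a disallowed path and is refused.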

3. Using the Robots.txt Tester Tool to Check Blocked URLs

The Adsbot robots.txt testing tool is an essential tool for website owners who want to ensure that they have properly blocked unwanted URLs. As discussed previously, the robots.txt file helps manage crawler traffic to a website, and the robots.txt testing tool allows webmasters to check whether the file is blocking or allowing access to URLs correctly. By simply entering a URL into the tester tool, webmasters can quickly determine whether that URL is accessible or restricted. It’s important to validate the robots.txt file regularly with the Adsbot checker to ensure that it’s working correctly and not causing any unnecessary SEO issues. It’s equally important to be cautious when blocking URLs, as blocking important pages can harm a website’s SEO. It’s best practice to consistently monitor and adjust the robots.txt file with the help of the Adsbot tester, optimizing the website’s crawlability while avoiding the pitfalls of blocking critical pages.
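The same kind of check can be scripted for a whole list of URLs. The sketch below is not how the Adsbot tool works internally; it is an illustration built on Python's standard-library `urllib.robotparser`, and the rules, URLs, and the `find_mismatches` helper are hypothetical. It compares what you expect for each URL against what the file actually says and reports only the disagreements.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules; the /products/ line is the kind of accidental block we want to catch.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /products/
"""

# URL -> whether we EXPECT crawlers to be allowed (assumed values for the example).
EXPECTED = {
    "https://example.com/": True,
    "https://example.com/products/blue-widget": True,
    "https://example.com/checkout/step-1": False,
}


def find_mismatches(robots_txt, expectations, user_agent="Googlebot"):
    """Return (url, expected, actual) for every URL whose access differs from expectations."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    mismatches = []
    for url, expected in expectations.items():
        actual = parser.can_fetch(user_agent, url)
        if actual != expected:
            mismatches.append((url, expected, actual))
    return mismatches


for url, expected, actual in find_mismatches(ROBOTS_TXT, EXPECTED):
    print(f"{url}: expected allowed={expected}, got allowed={actual}")
```

Here only the product page is reported, because it was meant to be crawlable but the `Disallow: /products/` rule blocks it.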

4. How to Test and Validate Your Robots.txt File

To ensure that your website’s robots.txt file is blocking unwanted bots while allowing search engines to crawl and index your pages, it is essential to test and validate it regularly. The Adsbot robots.txt testing tool lets you enter your website’s URL and test its robots.txt file. Adsbot checks for blocked URLs and resources and helps you identify the rule that is blocking them. By identifying and fixing these issues, you can improve your website’s SEO and prevent search engines from indexing content incorrectly. It is recommended to test and validate your robots.txt file with the Adsbot checker after any significant change to your website so that it continues to rank well in search results.
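To illustrate what "identifying the blocking rule" means, here is a deliberately simplified sketch. The `find_blocking_rule` function and the sample rules are assumptions for this example, not Adsbot's implementation. It only looks at `User-agent: *` groups, uses plain prefix matching without wildcard support, and lets the longest matching path win, with `Allow` winning a tie.

```python
from urllib.parse import urlparse

# Hypothetical rules for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /private/press/
Disallow: /tmp/
"""


def find_blocking_rule(robots_txt, url):
    """Return (line_number, rule_text) of the Disallow rule blocking `url`, or None."""
    path = urlparse(url).path or "/"
    in_wildcard_group = False
    previous_was_agent = False
    best = None  # (path_length, is_allow, line_number, rule_text)

    for number, raw in enumerate(robots_txt.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()

        if field == "user-agent":
            # Consecutive User-agent lines belong to the same group.
            if not previous_was_agent:
                in_wildcard_group = False
            in_wildcard_group = in_wildcard_group or value == "*"
            previous_was_agent = True
            continue

        previous_was_agent = False
        if field not in ("allow", "disallow") or not in_wildcard_group:
            continue
        if value and path.startswith(value):
            candidate = (len(value), field == "allow", number, line)
            # Longest path wins; on equal length, Allow (True) beats Disallow (False).
            if best is None or candidate[:2] > best[:2]:
                best = candidate

    if best is None or best[1]:
        return None
    return best[2], best[3]


print(find_blocking_rule(ROBOTS_TXT, "https://example.com/private/report.pdf"))
print(find_blocking_rule(ROBOTS_TXT, "https://example.com/private/press/kit.zip"))
```

The first call reports line 2 (`Disallow: /private/`) as the culprit. The second returns `None`, because the longer `Allow: /private/press/` rule takes precedence.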

5. The Risks of Blocking Important Pages with Robots.txt

Blocking important pages with a robots.txt file poses significant risks to a website’s SEO. Although a disallowed URL can still appear in search results, it will not have a description, which can negatively impact click-through rates. Additionally, disallowing URLs means that Google cannot crawl those pages to determine their content, which could cause them to drop from search results. It’s important not to accidentally disallow important pages, as this can significantly reduce your website’s traffic and visibility. Therefore, it’s crucial to understand how to properly check and validate your robots.txt file and avoid common mistakes when writing it. Adsbot is a PPC optimization software that also includes a robots.txt testing service. Using machine learning technology, Adsbot helps keep your account analyzed, improved, and updated according to real-time customer needs. Proper management and monitoring of the robots.txt file can help ensure that search engines can crawl all necessary pages. You can easily try the Adsbot robots.txt checker to see how it works for your company!

6. Common Mistakes to Avoid When Writing Your Robots.txt File

Several errors should be avoided when creating a robots.txt file. One typical error is incorrect syntax, which might stop search engine crawlers from seeing your site’s pages. Another is misusing a trailing slash when blocking or permitting a URL: a rule with and without the trailing slash matches different sets of URLs, which might result in pages not being indexed correctly. It’s also important to note that simply disallowing a page does not always keep it out of search results, and not every bot obeys the file. Additionally, an empty robots.txt file places no restrictions on crawlers, so it will not protect anything you meant to block. Make sure that your robots.txt file is in the root directory of your site. With the Adsbot robots.txt checker tool, you can verify that search engines can properly crawl and index your website. By avoiding these common mistakes and regularly testing and validating your robots.txt file, you can ensure that your site is properly crawled and indexed by search engines.
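The trailing-slash mistake is easy to see in a small experiment. The sketch below uses Python's standard-library `urllib.robotparser`, which applies plain prefix matching; the `allowed_paths` helper, the paths, and the `example.com` domain are hypothetical.

```python
from urllib.robotparser import RobotFileParser

PATHS = ["/blog", "/blog/", "/blog/post-1", "/blog-archive"]


def allowed_paths(rule_path, paths, user_agent="Googlebot"):
    """Map each path to True/False under a single `Disallow: <rule_path>` rule."""
    parser = RobotFileParser()
    parser.parse(["User-agent: *", f"Disallow: {rule_path}"])
    return {
        path: parser.can_fetch(user_agent, f"https://example.com{path}")
        for path in paths
    }


# Without the trailing slash, every path that merely starts with "/blog" is blocked,
# including the unrelated "/blog-archive".
print(allowed_paths("/blog", PATHS))

# With the trailing slash, only URLs inside the "/blog/" directory are blocked;
# "/blog" itself and "/blog-archive" stay crawlable.
print(allowed_paths("/blog/", PATHS))
```

Neither form is wrong in itself. The point is to pick the one that matches exactly the URLs you intend to block.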

7. How to Allow or Block Specific User Agents with Robots.txt

To allow or block specific user agents with robots.txt, website owners need to understand the syntax of the file. Each rule within the robots.txt file blocks or allows access for all crawlers or for a specific crawler to a specified file path on the domain or subdomain. Website owners can use the “user-agent” directive to name a specific spider and apply rules to it, so one crawler can be restricted while others are allowed to crawl the entire site. It is important to note that a crawler follows the most specific group of rules that names it in the “user-agent” directive. To confirm that specific user agents are blocked or allowed as intended, website owners should use the Adsbot robots.txt testing tool. This tool operates like Googlebot and validates that your URL has been properly blocked or allowed based on the user agent rules set in the robots.txt file.
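As a hedged illustration of per-crawler rules, the sketch below gives Googlebot its own group and blocks everyone else from the whole site. The rules, the `crawl_permissions` helper, and the URLs are made up for this example, and Python's standard-library `urllib.robotparser` stands in for a real crawler.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: Googlebot may crawl everything except /drafts/,
# while all other crawlers are blocked from the entire site.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /drafts/

User-agent: *
Disallow: /
"""


def crawl_permissions(robots_txt, user_agents, url):
    """Return {user_agent: allowed} for a single URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in user_agents}


AGENTS = ["Googlebot", "Bingbot"]
print(crawl_permissions(ROBOTS_TXT, AGENTS, "https://example.com/blog/"))
# {'Googlebot': True, 'Bingbot': False}
print(crawl_permissions(ROBOTS_TXT, AGENTS, "https://example.com/drafts/idea"))
# {'Googlebot': False, 'Bingbot': False}
```

Googlebot is matched by its own group and never falls through to the `*` group, which is why it can reach `/blog/` even though the catch-all group disallows everything.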

8. How Search Engines Use Robots.txt to Discover Your Sitemap

The robots.txt file also plays a role in how search engines discover your sitemap. For those unfamiliar, a sitemap is a file that lists the pages, images, and other relevant content on your website. By having a sitemap, you make it easier for search engine crawlers to find and index your pages. You can point crawlers to it by adding a `Sitemap:` line with the sitemap’s full URL to your robots.txt file. However, if a rule in your robots.txt file blocks the sitemap’s URL, crawlers that obey the file may be unable to fetch it. The Adsbot robots.txt checker shows you how search engine crawlers read your robots.txt file, so you can confirm that the sitemap is listed and accessible, which matters for your website’s visibility.
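A quick way to check both points, that the sitemap is declared and that it is not blocked, is sketched below. It assumes Python 3.8 or later, where `RobotFileParser.site_maps()` is available; the rules, URLs, and the `sitemap_report` helper are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that declares one sitemap.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/

Sitemap: https://example.com/sitemap.xml
"""


def sitemap_report(robots_txt, user_agent="Googlebot"):
    """Return {sitemap_url: crawlable} for every Sitemap line in the file."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    sitemaps = parser.site_maps() or []  # site_maps() returns None when nothing is declared
    return {url: parser.can_fetch(user_agent, url) for url in sitemaps}


print(sitemap_report(ROBOTS_TXT))
# {'https://example.com/sitemap.xml': True}
```

An empty result means no sitemap is declared, and a `False` value means a rule in the same file is blocking the sitemap URL.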

9. How to Manually Submit Pages Blocked by Robots.txt for Indexing

After identifying blocked pages in Search Console and correcting any errors in the robots.txt file with Adsbot, it may be necessary to manually submit these pages for indexing. This can be done through the URL Inspection tool in Search Console. First, enter the previously blocked URL, then click “Request Indexing” to initiate the crawl request. This process may take several days to complete, but once the page is indexed, it should appear in search results. Keep in mind that repeatedly submitting pages for indexing may run into limits imposed by search engines, so it is important to make sure the robots.txt file is correctly configured first and does not create unnecessary roadblocks for web crawlers. By following best practices with the Adsbot robots.txt checker for managing and monitoring the robots.txt file, website owners can optimize their site for search engines while still maintaining control over how content is indexed and displayed to users.

Popular Posts

-

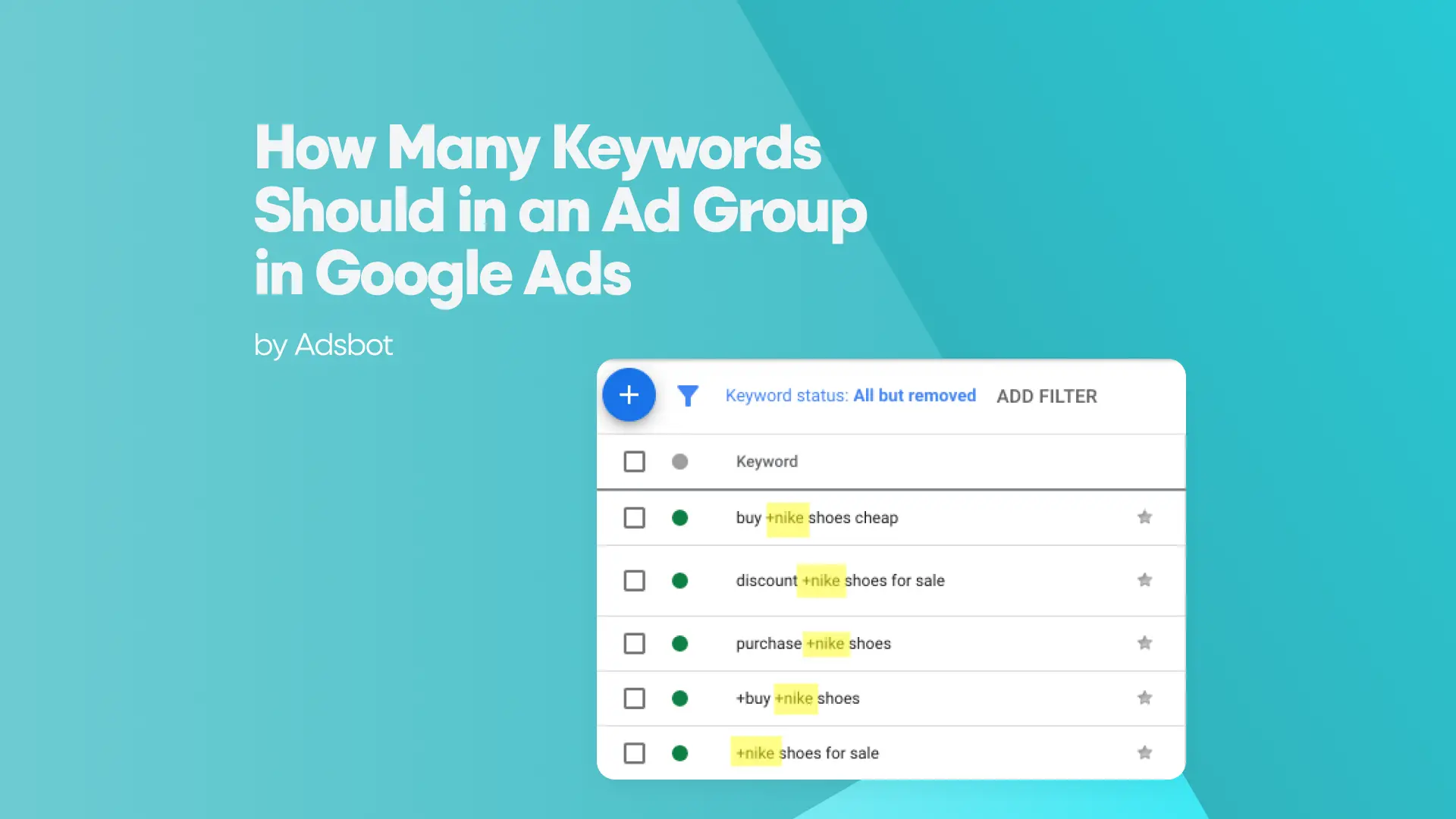

How Many Keywords Should Be In an Ad Group in Google Ads?

Ever wondered if your Google Ads campaigns are packed with…

Read more -

Google Ads Script for Dummies: An Introduction

Imagine you have an e-commerce website that sells licensed superhero…

Read more -

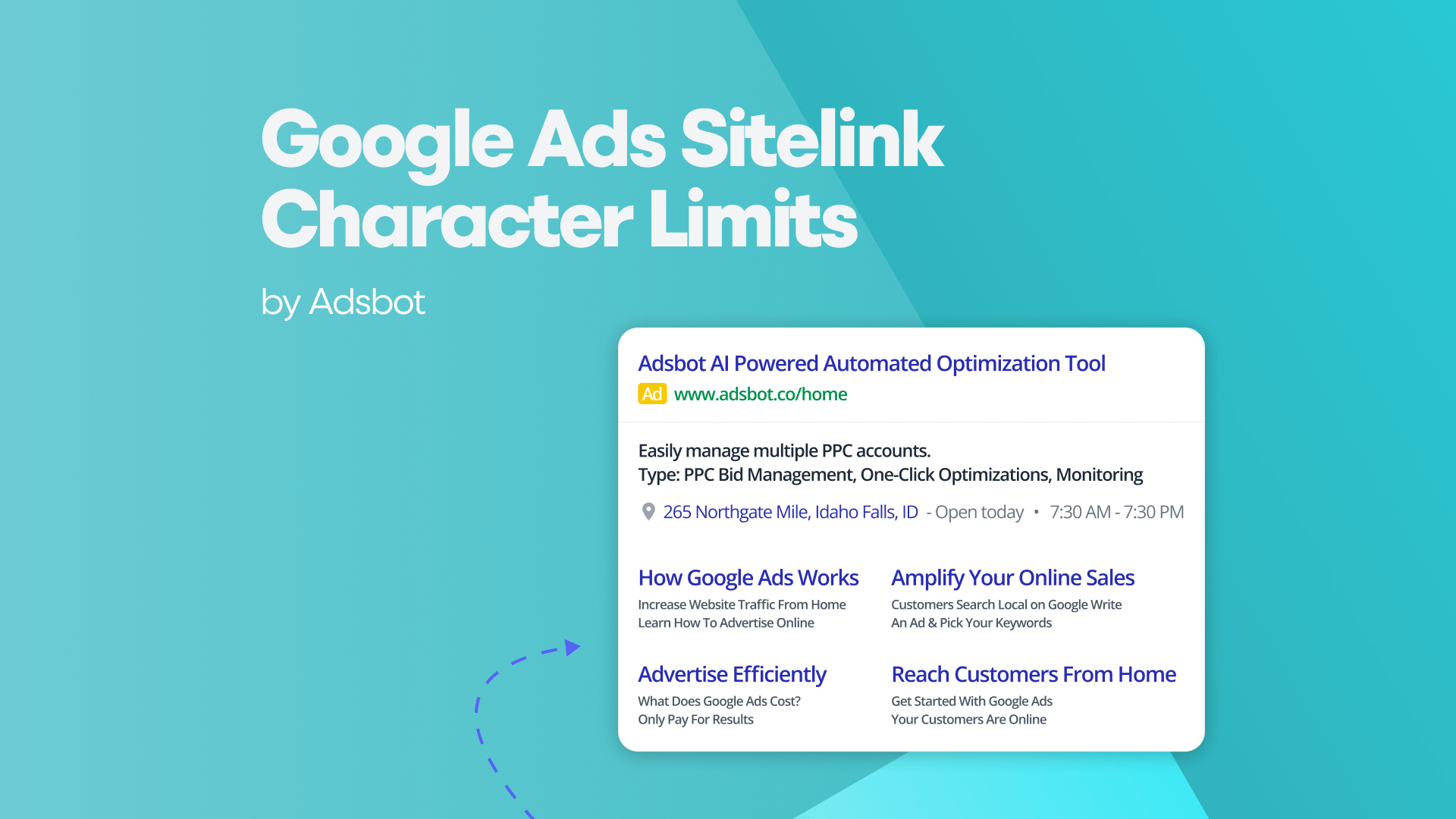

Google Ads Sitelink Character Limits

Your Google Ads are cutting off in the middle of…

Read more -

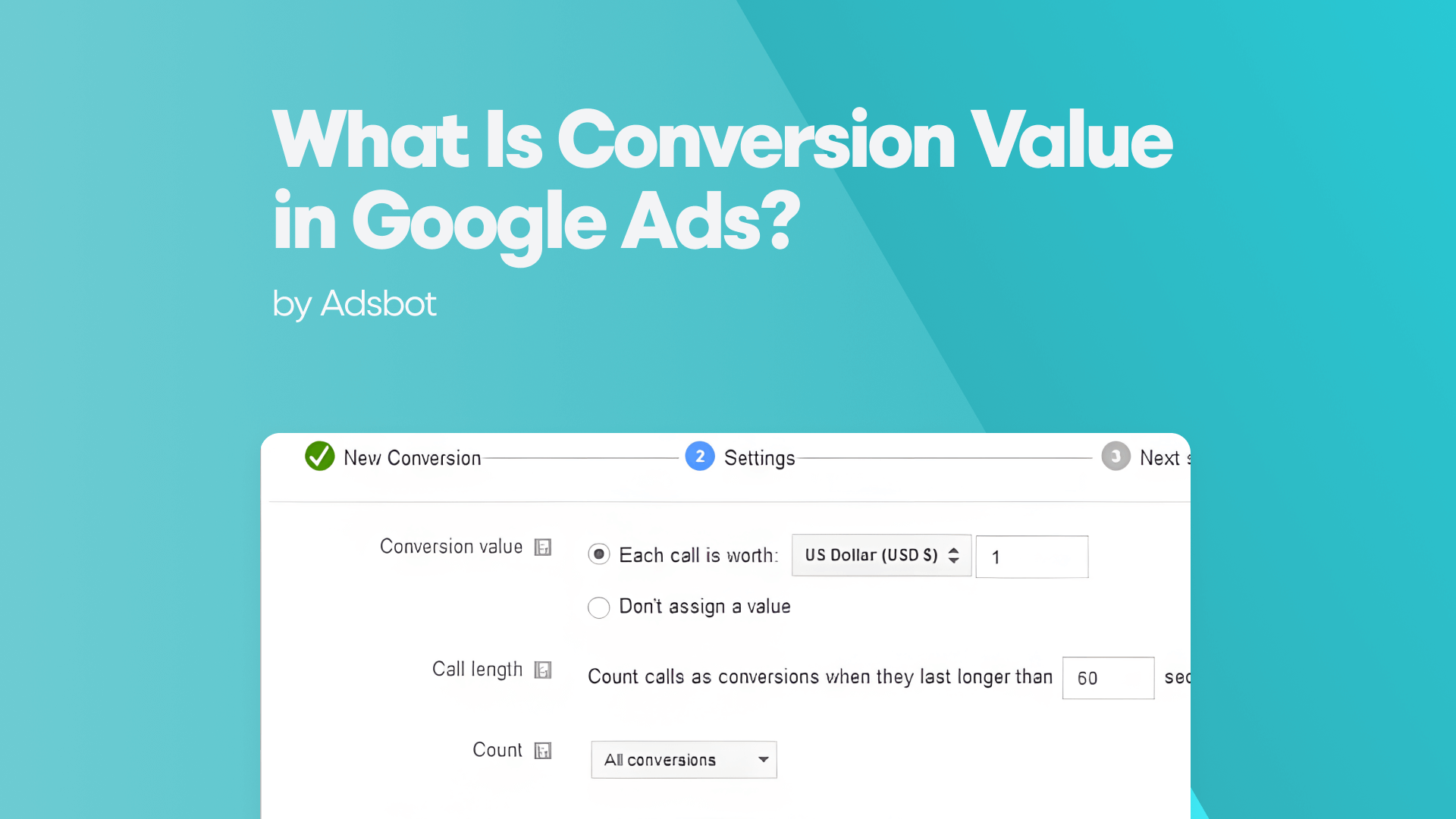

What Is Conversion Value in Google Ads?

What if you could put a price tag on every…

Read more

Register for our Free 14-day Trial now!

No credit card required, cancel anytime.